The Jobs endpoint provides a programmatic interface to orchestrate jobs, including listing jobs, getting status for specific jobs, creating jobs, and stopping jobs.

Important: You cannot use the Jobs endpoint to interact with jobs that are scheduled in the UI Scheduler page.

Job States

Jobs run via the Jobs API can have the following states:

- PENDING: The job has been submitted but is not yet running.

- RUNNING: The job is running.

- STOPPING: A stop was requested before the job finished, and the job is in the process of stopping.

- DONE: The job is finished. This can indicate that the job finished successfully, was stopped, or failed.
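DONE covers success, a stop, and failure alike, so a client polling job status can treat it as the only terminal state. The following minimal Python sketch models the four documented states. It assumes the API reports the state as one of these exact strings. The names `JobState` and `is_terminal` are illustrative helpers, not part of any Tamr SDK.

```python
from enum import Enum


class JobState(Enum):
    """The four job states documented for the Jobs API."""

    PENDING = "PENDING"    # submitted, not yet running
    RUNNING = "RUNNING"    # currently running
    STOPPING = "STOPPING"  # stop requested, still shutting down
    DONE = "DONE"          # finished: succeeded, was stopped, or failed


def is_terminal(state: str) -> bool:
    """Return True when a job will not change state again.

    Assumes the state arrives as one of the literal strings above and
    raises ValueError for anything else. DONE does not say whether the
    job succeeded, so check the outcome separately before relying on
    the job's results.
    """
    return JobState(state) is JobState.DONE
```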

Creating Jobs

You can create the following types of jobs with the Jobs create operation:

| Job Type | Description | Requirements |
|---|---|---|
| load | Refreshes (loads) a source dataset. | The source being loaded (refreshed) must have been previously added in the Tamr Cloud UI. You must provide the … |
| update (data product) | Runs a configured data product and updates the data product results. | The data product must have been added and configured in the Tamr Cloud UI. It does not need to be run in the UI before you create this job via the API. You must provide the … |
| update (workflow) | Updates the Tamr RealTime system of record with either the output of a data product run or a source dataset, depending on the workflow configuration. | The workflow must be configured by Tamr before this job can be run. You must provide the … |
| publish | Publishes datasets to configured publish destinations. Create a publish job to export data product datasets such as golden records, source records, and enhanced source records. For Tamr RealTime users, create a publish job to publish the RealTime datastore dataset to a configured S3 destination. | The publish destination must be configured in the Tamr Cloud UI. Before publishing the Tamr RealTime datastore, contact Tamr ([email protected]) to configure the destination. You must provide the … Additionally, if you are publishing a non-legacy data product, you must also supply the … |

Running the Data Pipeline via API

You can run all tasks in your data pipeline by making multiple, sequential calls to the Jobs create endpoint. These tasks include refreshing source datasets, running the data product, and publishing the resulting datasets to configured destinations.
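The sequential pattern can be sketched as a loop that submits one job, polls until it reaches DONE, and only then submits the next. This is a minimal sketch under stated assumptions: `create_job` and `get_job_state` are hypothetical callables you would wire to your own HTTP client, the step payloads are opaque because the request body for each job type is not shown in this section, and `gap_seconds` is an optional illustrative buffer between consecutive jobs.

```python
import time
from typing import Callable, Iterable, List, Mapping

# Hypothetical callables that you wire to your own HTTP client:
#   create_job(payload) -> job ID returned by the Jobs create operation
#   get_job_state(job_id) -> current state string, e.g. "RUNNING" or "DONE"
CreateJob = Callable[[Mapping], str]
GetJobState = Callable[[str], str]


def run_pipeline(
    steps: Iterable[Mapping],
    create_job: CreateJob,
    get_job_state: GetJobState,
    poll_seconds: float = 60.0,
    gap_seconds: float = 0.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Submit each step only after the previous job reaches DONE.

    Returns the job IDs in submission order. DONE also covers stopped and
    failed jobs, so production code should check each job's outcome
    before moving on to the next step.
    """
    job_ids: List[str] = []
    for index, payload in enumerate(steps):
        if index > 0 and gap_seconds > 0:
            # Optional extra buffer between consecutive jobs.
            sleep(gap_seconds)
        job_id = create_job(payload)
        job_ids.append(job_id)
        # Poll until this job is finished before submitting the next one.
        while get_job_state(job_id) != "DONE":
            sleep(poll_seconds)
    return job_ids
```

In a typical run, `steps` holds one payload each for a load job, a data product update job, and a publish job, in that order. Injecting `sleep` keeps the function testable without real delays.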

Important: For best results, leave a buffer of several hours between jobs for the same data product. The duration of each job depends on the size of the dataset and complexity of the mastering flow. Monitor how long jobs take to complete, and use that information to ensure you have an adequate buffer between jobs.
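One way to act on this advice is to record the start and end times of past jobs for a data product and size the buffer from the longest run. The sketch below does that. The 1.5 safety factor is an arbitrary illustrative margin, not a Tamr recommendation, and the source of the timestamps (your own logs or job details from the API) is left as an assumption.

```python
from datetime import datetime, timedelta
from typing import Sequence, Tuple


def suggested_buffer(
    runs: Sequence[Tuple[datetime, datetime]], safety_factor: float = 1.5
) -> timedelta:
    """Return the longest observed job duration scaled by a safety factor.

    runs: (start, end) pairs recorded for past jobs on one data product.
    safety_factor: illustrative margin (assumption), not a documented value.
    """
    if not runs:
        raise ValueError("need at least one completed run")
    longest = max(end - start for start, end in runs)
    return longest * safety_factor


runs = [
    (datetime(2024, 1, 1, 0, 0), datetime(2024, 1, 1, 2, 0)),  # 2-hour run
    (datetime(2024, 1, 2, 0, 0), datetime(2024, 1, 2, 3, 0)),  # 3-hour run
]
print(suggested_buffer(runs))  # 4:30:00
```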

Concurrent Job Limits

Tamr Cloud supports 5 concurrent running jobs per tenant by default. Job requests beyond this limit return a 429 Too Many Requests error.
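Because job requests over the concurrency limit return 429 Too Many Requests, a client can wait and retry. The sketch below uses exponential backoff. The attempt count and delays are illustrative assumptions, not documented Tamr values, and `submit` is a hypothetical callable that sends one request and returns the HTTP status code and parsed body.

```python
import time
from typing import Any, Callable, Tuple

# Hypothetical: submit() sends one Jobs request and returns
# (HTTP status code, parsed response body). Adapt it to your HTTP client.
Submit = Callable[[], Tuple[int, Any]]


def submit_with_backoff(
    submit: Submit,
    max_attempts: int = 5,
    base_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, Any]:
    """Retry while the tenant is at its concurrent-job limit (HTTP 429).

    Any other status, including errors, is returned to the caller as-is.
    Delays double after each 429: 30 s, 60 s, 120 s, ... by default.
    """
    for attempt in range(max_attempts):
        status, body = submit()
        if status != 429:
            return status, body
        if attempt < max_attempts - 1:
            # Skip the pointless wait after the final attempt.
            sleep(base_delay * 2 ** attempt)
    raise RuntimeError(f"still rate-limited after {max_attempts} attempts")
```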

Obtaining Resource IDs

You must provide the ID of the associated resource when making most job requests. For example, you must provide the sourceId when submitting a job to load a source, and you must provide a jobId when stopping a running job.

Obtaining the jobId

jobIdThe job ID is included in the create request response. You can also obtain a list of job IDs by submitting a list request to the Jobs API.

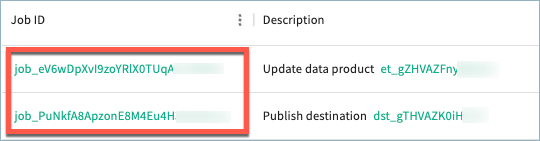

Job IDs are also available in the Tamr Cloud UI on theJobs page, as shown below. Select the ID to automatically copy it to your clipboard.
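For example, to find running jobs you might want to stop, you can filter a list response by state. The following Python sketch assumes a response shape that is not documented in this section. The `jobs`, `id`, and `state` keys are hypothetical placeholders to adjust to the real format.

```python
import json
from typing import List


def job_ids_in_state(list_response: str, state: str) -> List[str]:
    """Return the IDs of jobs in the given state from a Jobs list response.

    Assumes a hypothetical JSON body shaped like
    {"jobs": [{"id": "...", "state": "RUNNING"}, ...]}.
    Adjust the "jobs", "id", and "state" keys to the real response format.
    """
    body = json.loads(list_response)
    return [
        job["id"]
        for job in body.get("jobs", [])
        if job.get("state") == state
    ]
```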

Obtaining the sourceId

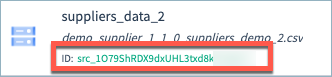

sourceIdSource IDs are available in the Tamr Cloud UI on the Sources page, as shown below. Select the ID to automatically copy it to your clipboard.

Obtaining the dataProductId

The data product ID (dataProductId) is available in the Tamr Cloud UI. Select the ID to automatically copy it to your clipboard.

Non-legacy data products

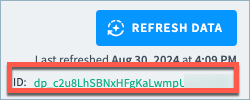

For non-legacy data products, the data product ID is available on the data product's Configure Data Product page, as shown below. See Data Product Guides for the current list of non-legacy data products.

Legacy data products

For legacy data products, the data product ID is available on the data product's Configure Flow page, as shown below. See Legacy Data Product Templates for the current list of legacy data products.

Obtaining the workflowId

Contact Tamr ([email protected]) for the ID of the configured workflow (workflowId).

Obtaining the destinationId

The destination ID (destinationId) is available in the Tamr Cloud UI.

Non-legacy data products

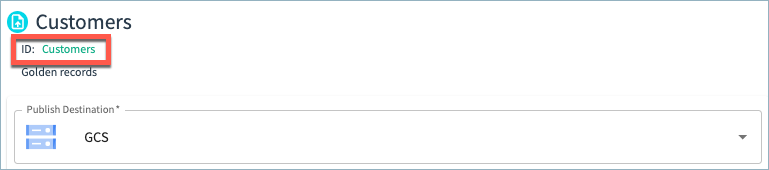

For non-legacy data products, the destination ID is the exact name of the publish configuration. This ID is available on the data product's Publish page, as shown below. Provide the exact string. For example, if the name of the configuration is Test 123, the destinationId is Test 123. Select the ID to automatically copy it to your clipboard. See Data Product Guides for the current list of non-legacy data products.

Legacy data products

For legacy data products, the destination ID is available on the data product's Publish page, as shown below. Select the ID to automatically copy it to your clipboard. See Legacy Data Product Templates for the current list of legacy data products.

RealTime datastore

To publish the RealTime datastore dataset for Tamr RealTime, contact Tamr at [email protected] for the publish destination ID.